Most intelligent version of Curiosio launched on 01/23. Labeled Beta3. As smart as Beta2 but 100x faster. Intro and details at Ingeenee Engineering Blog.

Start with this cool comparison of Mathematics and Physics by Richard Feynman. Physicists are always about the special case. Mathematicians are always about the general case. Physicists reverse engineer the world, recreating the technologies available in the Universe. Physicists even think beyond the Universe…

Continue with these ruminations about Mathematics by Stephen Wolfram – was mathematics invented or discovered? He thinks that the math is already there; we just need to get to those spaces.

Here are details on the Computing Theory of Everything, by Stephen Wolfram. Just as Galileo Galilei built his telescope to observe and discover far space, Wolfram has invented, and keeps inventing, tools to discover the math, all those spaces. It is not a combinatorial mess, as the spaces can be shaped nicely, depending on the laws within. Look at this amazing Rule 30, look at this annoying Rule 184.
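To get a feel for how simple the laws behind Rule 30 and Rule 184 are, here is a minimal sketch of an elementary cellular automaton. The function names (`step`, `run`, `render`) and the wrap-around edges are my own assumptions for illustration, not Wolfram's code. The rule number is read as an 8-bit lookup table: each cell looks at itself and its two neighbors, and the resulting 3-bit value selects one bit of the rule.

```python
def step(cells, rule):
    """Apply one step of an elementary cellular automaton (wrap-around edges)."""
    n = len(cells)
    new_cells = []
    for i in range(n):
        left, center, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        neighborhood = (left << 2) | (center << 1) | right  # value 0..7
        new_cells.append((rule >> neighborhood) & 1)         # pick that bit of the rule
    return new_cells


def run(rule, width=31, steps=15):
    """Start from a single live cell in the middle and collect all rows."""
    cells = [0] * width
    cells[width // 2] = 1
    rows = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        rows.append(cells)
    return rows


def render(rows):
    """Draw live cells as '#' and dead cells as '.'."""
    return "\n".join("".join("#" if c else "." for c in row) for row in rows)


if __name__ == "__main__":
    print(render(run(30)))
```

Calling `run(184)` instead shows the other rule: from a single live cell it just shifts that cell one step to the right per row.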

Think of forthcoming Quantum Computing, which is closer to what Feynman foresaw about machinery without mathematics (watch first video, from 6:00 to 7:30). Why do we need infinite computational power, based on mathematics & logic, to figure out what happens in a tiny place in space? A fairly modern supercomputer needs a few hours to simulate 10^11 individual atoms, which is ~10^11 times fewer than the number of atoms in only 1 gram of iron (Fe)…

But what about simulating new worlds at the level of the individual atom? We could build a simulation, and it would run on mathematics. We just need to squeeze the computational power out of the physical universe.

Are mathematics and physics converging?

PS. Everything above physics is understood. Chemistry deals with bigger sizes. And so on upwards to huge sizes… till the edge of the Universe, which we still don’t understand. But maybe the Math will help here?

Since mankind developed some good intelligence, we [people] immediately started to discover our world. We walked on foot as far as we could reach. We domesticated big animals – horses – and rode them to reach even further, horizontally and vertically. So we reached the water. Horses could not bring us across the seas and oceans. We had to create a new technology that could carry people over the water – ships.

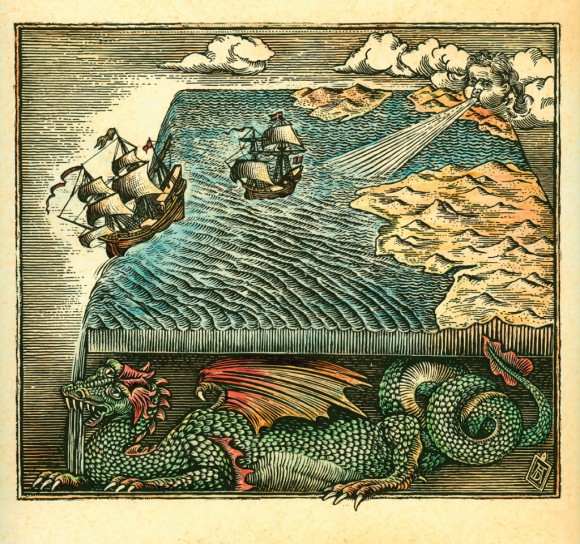

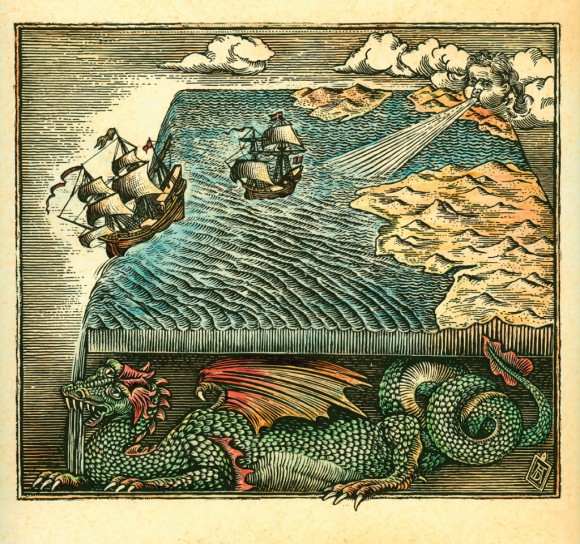

Fantasy map of a flat earth — Image by © Antar Dayal/Illustration Works/Corbis

Ship building required a good deal of calculation in itself. And a ship alone is not sufficient to get there. Some navigation is needed. We developed measurement and calculation of wood and nails, measurement of time, navigation by the stars and the sides of the world. That was a kind of computing. Not the earliest computing ever, but computing good enough to let us spread the knowledge and vision of our [flat] world.

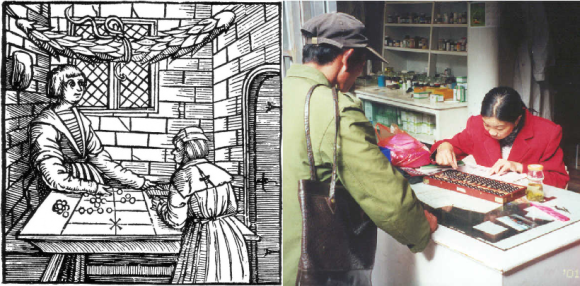

An early device for computing was the abacus. Though it is usually called a calculating tool or counting frame, we use the word computing, because this topic is about computing technology. The abacus as computing technology was designed at a size bigger than a man and smaller than a room. Then the wooden computing technology was miniaturized to desktop size. This is important: it emerged at a size between 1 and 10 meters, and got smaller over time to fit onto a desktop. We could call it manual wooden computing too. Wooden computing technology is still in use nowadays in African countries, China, Russia.

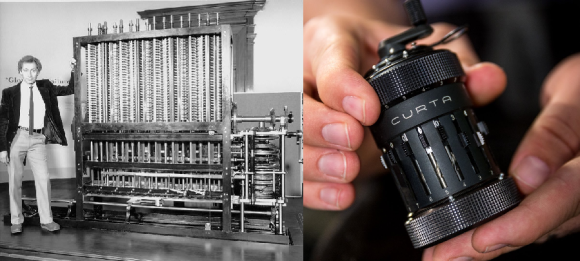

Metal computing emerged after wooden. Charles Babbage designed his analytical engine from metal gears, to be more precise – from the Leibniz wheel. That animal was bigger than a man, and smaller than a room. Below is a juxtaposition of the inventor himself with his creation (on the left). Metal computing technology was miniaturized over time, and fit into a hand.

Curt Herzstark made a really small mechanical calculator and named it Curta (on the right). Curta also lived long, well into the middle of the XX century. Nowadays Curta is a favorite collectible, priced at $1,000 minimum on eBay, while the majority of price tags are around $10,000 for a good working device, built in Liechtenstein.

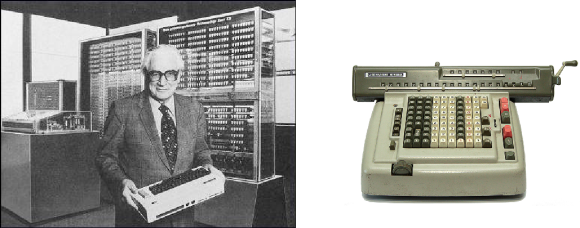

Babbage’s machine became a gym device when Konrad Zuse designed the first fully automatic electro-mechanical machine, the Z3. Clock speed was 5-10Hz. The Z3 was used to model the flutter effect for military aircraft in Nazi Germany. The first Z3 was destroyed during a bombardment. The Z3 was bigger than a man, and smaller than a room (left photo). Then electro-mechanical computing was miniaturized to desktop size, e.g. the Lagomarsino semi-automatic calculating machine (right photo).

Here something new happened – growth beyond the size of a room. Harvard Mark I was a big electro-mechanical machine, put in a big hall. Mark I served the Manhattan Project. There was a problem: how to detonate the atomic bomb. The well-known von Neumann computed the explosive lens on it. Mark I was funded by IBM, Watson Sr.

So, electro-mechanical computing started from a size bigger than a man, smaller than a room, and then evolved in two directions: it was miniaturized to desktop size, and it grew to small stadium size.

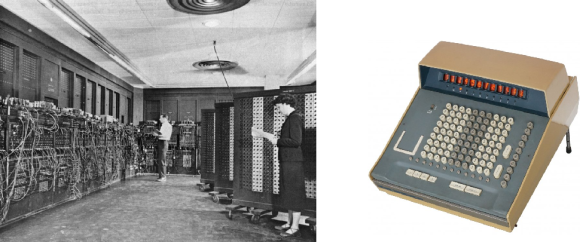

At some point, mechanical parts were redesigned as electrical ones, and the first fully electrical machine was created – ENIAC. It used vacuum tubes. Its size was bigger than a man, smaller than a big room (left photo). The fully electrical computing technology on vacuum tubes got miniaturized to desktop size (right photo).

Miniaturization was very interesting and beautiful. Even vacuum tubes could be small and nice. Furthermore, there were many women in the industry at the time of electrical vacuum tube computing. Below are the famous “ENIAC girls”, with the evidence of miniaturization of modules, from left to right, smaller is better. Side question: why did women leave programming?

ENIAC was very difficult to program. Here is a tutorial on how to code the modulo function. There were six programmers who could do it really well. ENIAC was intended for ballistic computing. But the same well-known von Neumann from the atomic bomb project got access to it and ordered the first ten programs for the hydrogen bomb.

Fully automatic electrical machines grew big, very big, bigger than Mark I, II, III etc. They were used for military purposes and space programs. IBM SAGE is in the photo; its size is like a mid-size stadium.

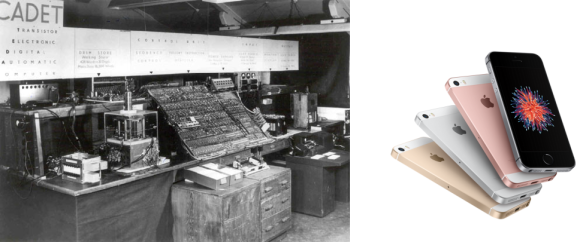

The first fully transistorized machine was probably built by IBM, though there is a photo of the European [second] machine, called CADET (left photo). There were no vacuum tubes in it anymore. Transistor technology is still alive, very well miniaturized to desktop and hand (right photo).

Miniaturization of transistor computing went even further than the size of the hand. Think of small contact lenses, small robots in veins, brain implants, spy devices and so on. And transistors are getting smaller and smaller; today 14nm is not a big deal. There are a dozen silicon foundries capable of doing FinFET at such scale.

Transistor computers grew really big, to the size of a stadium. The Earth is being covered by data centers, sized as multiple stadiums. The Titan computer is in the photo, capable of crunching data at the rate of 10 petaFLOPS. The most powerful supercomputer today is the Chinese Sunway TaihuLight at 93 petaFLOPS.

But let me remind you of the point: electrical transistor computing was designed at a size bigger than a man, smaller than a room, and then evolved into tiny robots and huge supercomputers.

Designed at the size bigger than a man, smaller than a room.

Everything is a fridge. The magic happens at the edge of that vertical structure, framed by the doorway, 1 meter above the floor. There is a silicon chip, designed by D-Wave, built by Cypress Semiconductor, cooled to nearly absolute zero (about -273C). Superconductivity emerges. Quantum physics starts its magic. All you need is to shape your problem into one that the quantum machine can run.

It’s a somewhat complicated exercise, like the modulo function for the first fully automatic electrical machines on vacuum tubes years ago. But it is possible. You’ve got to take your time, paper and pen/pencil, and bring your problem to the equivalent Ising model. Then it is easy: give input to the quantum machine, switch on, switch off, take output. Do not watch while the machine is on, because you will kill the wave features of the particles.

Today, D-Wave solves problems 10,000x faster than transistor machines. There is potential to make it 50,000x faster. Cool times ahead!
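To make "bring your problem to the equivalent Ising model" a bit more concrete, here is a minimal sketch on a toy problem of my own choosing: splitting a list of numbers into two groups with equal sums. Each number gets a spin of +1 or -1 (which group it goes to), and the squared imbalance expands into pairwise couplings J = 2·a_i·a_j plus a constant. The energy convention E = Σ h_i·s_i + Σ J_ij·s_i·s_j and all function names are assumptions for illustration. A classical brute-force search stands in for the quantum machine here; this is not D-Wave's API.

```python
from itertools import product


def ising_energy(spins, h, J):
    """E(s) = sum_i h[i]*s[i] + sum_{i<j} J[i,j]*s[i]*s[j], with s[i] in {-1, +1}."""
    energy = sum(h[i] * s for i, s in enumerate(spins))
    energy += sum(c * spins[i] * spins[j] for (i, j), c in J.items())
    return energy


def partition_to_ising(numbers):
    """Map number partitioning to an Ising model.

    (sum_i a_i*s_i)^2 = sum_i a_i^2 + 2*sum_{i<j} a_i*a_j*s_i*s_j,
    so minimizing the pairwise part minimizes the imbalance between groups.
    """
    n = len(numbers)
    h = [0] * n  # no local fields are needed for this problem
    J = {(i, j): 2 * numbers[i] * numbers[j]
         for i in range(n) for j in range(i + 1, n)}
    return h, J


def brute_force_ground_state(h, J):
    """Classical stand-in for the machine: try every spin assignment."""
    return min(product((-1, 1), repeat=len(h)),
               key=lambda s: ising_energy(s, h, J))


numbers = [3, 1, 1, 2, 2, 1]
h, J = partition_to_ising(numbers)
best = brute_force_ground_state(h, J)
imbalance = sum(a * s for a, s in zip(numbers, best))

if __name__ == "__main__":
    print("spins:", best, "imbalance:", imbalance)
```

The brute force tries 2^n assignments, which is exactly the part that stops scaling on transistor machines and the part the quantum hardware is meant to take over.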

Why do we need such huge computing capabilities? Who cares? I personally care. Maybe others similar to me, me similar to them. I want to know who we are, what is the world, and what it’s all about.

Nature does not compute the way we do with transistor machines. As my R&D colleague said about a piece of metal: “You raise the temperature, and a solid brick of metal instantly goes liquid. Nature computes it at the atomic level, and does it very very fast.” Today one of the Chinese supercomputers, Tianhe-1A, computed the behavior of 110 billion atoms during 500,000 evolutions… Is it much? It corresponded to only 0.1 nanosecond of real time, done in three hours of computing.

Let’s do another comparison for the same number of atoms. It was about 10^11 atoms. If each evolution were computed in 1 millisecond, then all 500,000 would take only 500 seconds, less than 10 minutes. My body has about 10 trillion cells, or about 10^28 atoms. Hence, to simulate the entire me during those 10 minutes at the level of individual atoms, we would need about 10^18x more Tianhe-1A supercomputers… Obviously our current computing is the wrong way of computing. We need to invent further. But to invent further, we have to adopt a new way of computing – quantum computing.

Who needs such simulations? Here is a counter question – what is Intelligence? Intelligence is our capability to predict the future (Michio Kaku). We could compute the future at the atomic level and know it for sure. The stronger intelligence is, the more detailed and precise our vision into the future is. As we know the past, and know the future, the understanding of time changes. With really powerful computing, we know for sure what will be in the future as accurately as we know what happened in the past. The distant future is more complicated to compute than the distant past. But it is possible, and this is what Intelligence does. It uses computing to know the time. And move in time. In both directions.
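The back-of-envelope arithmetic behind that 10^18 can be sketched in a few lines. The inputs are the figures from the text; the assumption that the work scales linearly with both the number of atoms and the required speed-up is mine, and real simulations scale worse than that.

```python
# Figures quoted in the text
ATOMS_SIMULATED = 1.1e11   # 110 billion atoms on Tianhe-1A
EVOLUTIONS = 500_000       # simulation steps
WALL_CLOCK_S = 3 * 3600    # three hours of computing, in seconds
ATOMS_IN_BODY = 1e28       # rough estimate for a human body
TARGET_STEP_S = 1e-3       # wished-for rate: 1 millisecond per evolution

# 500,000 evolutions at 1 ms each
target_wall_clock_s = EVOLUTIONS * TARGET_STEP_S

# Assumption: cost grows linearly with atom count and with required speed-up
size_factor = ATOMS_IN_BODY / ATOMS_SIMULATED
speed_factor = WALL_CLOCK_S / target_wall_clock_s
machines_needed = size_factor * speed_factor

if __name__ == "__main__":
    print(f"target run time: {target_wall_clock_s:.0f} s")
    print(f"more atoms:      {size_factor:.1e}x")
    print(f"faster:          {speed_factor:.1f}x")
    print(f"machines needed: {machines_needed:.1e}")
```

The atom count alone accounts for a factor of about 10^17; the rest comes from squeezing three hours into 500 seconds.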

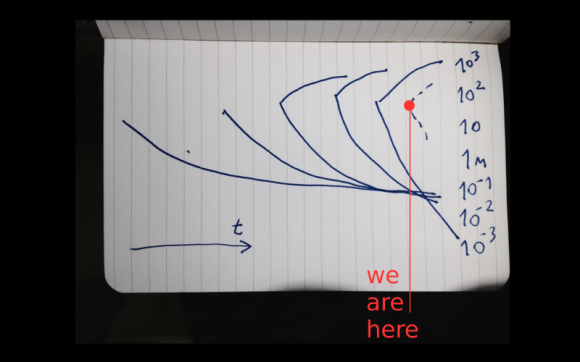

All computing technologies together, on one graph, show some pattern. Horizontally we have time, from past (left) to future (right). Vertically we have a scale of sizes, logarithmic, in meters. The red dot shows quantum computing. It is designed already, bigger than a man, smaller than a room. Upper limits are projected to be bigger than modern transistor supercomputers. The lower limit is unknown. It’s OK that both transistor and quantum computing technologies coexist and complement each other for a while.

All right, take a look at those charts, imagine the continuation of the quantum lines, what do you see? It is “Software is eating the World”. The dragon’s tail is on the left, the body is in the middle, and the huge mouth is on the right. And this Software Dragon is eating us at all scales. Some call it Digitization.

Software is eating the World, guys. And it’s OK. Right now we could do 10,000x faster computing on quantum machines. Soon we’ll be able to do 50,000x faster. Intelligence is evolving – our ability to see the future and the past. Our pathway to the time machine.

Keeping 97 percent of the market is very lucrative. But it is also fragile, because nothing lasts forever. Intel keeps 97 (or even 98) percent of the server processor market. It was the result of a brilliant strategy some time ago, when Intel decided to go for power saving while AMD was pursuing clock speed. So we got plenty of Intels, Intel here, Intel there, Intel everywhere (like in the So What song). Saving power was a good strategy. AMD is off the server processor market.

During that time, outside of datacenters, smartphones and other handheld gadgets became mainstream as consumer devices. They were running on other [simpler] chips, like ARM and ARM derivatives. Multiple companies produced them. Anybody could license the ARM design, add their own stuff, customize it all and make their own chip.

About 10 years ago, we got a smartphone equal in computing power to the Apollo mission. Today our smartphones are computers that can phone, rather than phones that can compute. Mobile processors grew bigger and bigger, and co-processors emerged. As a result we got a pretty good equivalent of a personal computer in a small form factor.

Why not use fat mobile processors in the datacenter, instead of the more complicated and power-greedy Intel ones? That was logical and somebody started to look there. Not for general use, but for high-performance computing where the GPU is not suitable (because of too small cores and memory copying inconveniences). Also for storage, especially cold storage.

Calxeda made big waves some time ago, in 2011. They designed really small servers named EnergyCore, which could be tightly packed into a 1U or 2U rack. After it failed to sign a deal with HP, funding was cut, and Calxeda shut down. That sucks, because there was a need on the market (OK, there was at least logical evidence). We could have had a 480-core server, consisting of 120 quad-core ARM Cortex-A9 CPUs, if it all hadn’t flopped. Most probably their processor was not juicy enough, hence declined by HP.

Others tried too at the same time. AppliedMicro announced the X-Gene chip also back in 2011. The roadmap is long. Today we have X-Gene2, and the powerful X-Gene3, which could battle Xeon E5, is scheduled for the second half of 2017. Slowly but reliably it has started: ARM has started to eat the datacenter with 64-bit ARMv8. Same performance at lower power consumption, and in a significantly smaller form factor [of the entire server].

What is coolest is the SoC. All those ARMs are actually a CPU plus infrastructure like memory channels, slots for disks, networking. It allows reducing the size of the entire server board dramatically. 1/3 of a 1U rack could contain 6 ARM SoCs, each with 50-70 cores, which equals 300-400 processors per sled. Or almost 1,000 processors in 1U. Each core with a good clock speed of 2.5GHz or so. With 2-3x less power consumption than Xeons. HP is building experimental ARM servers, not on Calxeda chips, but on AppliedMicro; check out Moonshot.

Cavium produced chips for network and storage appliances, and suddenly released the juicy ThunderX chip, and jaws dropped. It was a 48-core 64-bit ARMv8, with 2.5GHz clock speed. One of the biggest datacenters in the world – OVH – is running on ThunderX already. Recently Cavium redesigned it completely into ThunderX2. A 54-core SoC for high-density racks, not bad at all.

Intel builds Xeon Phi. They started with a co-processor and are moving to a host/bootable processor, named Knights Landing. It is still to be released. It should have ~260 cores, each core like a small Pentium. So compatibility with the Wintel era must be retained. For good or for bad? Compatibility was always a burden, but it was always needed by the market. How else to continue running all those apps? SAP or Oracle or Windows may not run well on ARM today.

Intel produced the less power-greedy Xeon-D, especially for the needs of Facebook, Microsoft and Google. But it is really interesting what Knights Landing, aka KNL, will be. There were some screenshots of the green screen and motherboard available. Premium equipment maker Penguin Computing announced both ThunderX and Xeon Phi support in their highly dense sleds. Check out the ThunderX and Knights Landing sleds.
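The density numbers above are easy to re-derive. A minimal sketch, using only the figures from the text (6 SoCs per sled, 50-70 cores per SoC, and a sled taking 1/3 of a 1U rack); the constant names are mine:

```python
SOCS_PER_SLED = 6          # ARM SoCs on one sled
CORES_PER_SOC = (50, 70)   # low and high end of the quoted range
SLEDS_PER_1U = 3           # one sled takes 1/3 of a 1U rack

# (low, high) core counts per sled and per 1U
cores_per_sled = tuple(SOCS_PER_SLED * c for c in CORES_PER_SOC)
cores_per_1u = tuple(SLEDS_PER_1U * c for c in cores_per_sled)

if __name__ == "__main__":
    print("cores per sled:", cores_per_sled)
    print("cores per 1U:  ", cores_per_1u)
```

So "almost 1,000 processors in 1U" is the low end of the range; at 70 cores per SoC it goes above 1,200.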

What should Intel do? They definitely have big plans, because spending ~$17B on Altera was well thought out. Though is the FPGA & IoT strategy well aligned with keeping the datacenter hegemony? Good ruminations are assembled in the post by Cringely.

Without Qualcomm it is difficult to tell how it all will unfold. Some companies tried and flopped, like Calxeda, and $130 million did not help. Some unusual players came in, like Cavium, and made noticeable waves. AppliedMicro decided to build its own processor. Amazon bought Annapurna to build its own processor for the AWS cloud (for ~$370 million). There is still some uncertainty about what Amazon has already made from that acquisition.

Qualcomm made some non-technical announcements, but still has to deliver the product. From that point, ARM eating the datacenter could accelerate and go mainstream. So we are waiting for the aha moment. It must happen by mid 2017 or sooner. It is going to be at 10nm. And it is thrilling – what comes from Qualcomm?

I did not address POWER8 and POWER9 here, because nobody makes them except IBM themselves (though select semiconductor companies say on their sites that they do POWER processors). Google experimented with POWER, RackSpace experimented with POWER. But RackSpace delayed Barreleye servers. And Google also experimented with ARMs, and was not so excited. Perhaps because that test chip was quite big and had only 24 cores.

It all points towards ARM as the new general purpose, HPC (where the GPU is not applicable) and storage servers. And it all points to Qualcomm; they will be a cornerstone of the datacenter revolution.

htop needs a redesign to properly display the 500 processors that the OS sees.

https://skillsmatter.com/skillscasts/8326-building-ai-another-intelligence

How AI tools can be combined with the latest Big Data concepts to increase people productivity and build more human-like interactions with end users. The Second Machine Age is coming. We’re now building thinking tools and machines to help us with mental tasks, in the same way that mechanical robots already help us with physical work. Older technologies are being combined with newly-created smart ones to meet the demands of the emerging experience economy. We are now in-between two computing ages: the older, transactional computing era and a new cognitive one.

In this new world, Big Data is a must-have resource for any cutting-edge enterprise project. And this Big Data serves as an excellent resource for building intelligence of all kinds: artificial smartness, intelligence as a service, emotional intelligence, invisible interfaces, and attempts at true general AI. However, often with new projects you have no data to begin with. So the challenge is, how do you acquire or produce data? During this session, Vasyl will discuss the process of creating new technology to solve business problems, and the strategies for approaching the “No Data Challenge”.

This new era of computing is all about the end user or professional user, and these new AI tools will help to improve their lifestyle and solve their problems.

…Then the Pterodactyl burst upon the world in all his impressive solemnity and grandeur, and all Nature recognized that the Cainozoic threshold was crossed and a new Period open for business, a new stage begun in the preparation of the globe for man. It may be that the Pterodactyl thought the thirty million years had been intended as a preparation for himself, for there was nothing too foolish for a Pterodactyl to imagine, but he was in error, the preparation was for Man… — Mark Twain

The Man. The man who won the Tour de France seven times. Having reached the human limit of physical capabilities, he [and others] extended them. He did blood doping (by taking EPO and other drugs, storing his own blood in the fridge, and infusing it before the competition to boost the number of red blood cells, and thus performance). He [and others] took anti-asthmatic drugs to increase endurance performance. And so on, and so on. There are Yes or No answers from Lance himself in Oprah’s interview.

Is Lance a cheater? Or is Lance a hero? I consider him a hero for two reasons. First, he competed against the same or similar. Second, he went beyond the human limits: cutting-edge thinking, cutting-edge behavior, scientific sacrifice, calculated or even bold risk.

What could be said about all other sportsmen? I think sporting pharmacology is an evolutionarily logical stage for humankind to outperform our ancestors, to break the records, to win, and continue winning. If sportsmen are specialized in competing, and society wants them competing, then everything is all set. Evolution goes on, biological meets artificial chemical. It improves the function, it solves the problem. Though it slightly distances our biological selves from what we thought we were.

It happens that people lose body parts. It is the right way to go to give them the missing parts. It’s still very complicated, the technologies involved are still not there, but good progress has been made. There are new materials, new mechanics, new production (digital manufacturing, 3D printing), new bio-signal processing (complex myogram readings), newly designed software (with AI), and all together it gives a tangible result. Take a look at this robot, integrated with the man:

Some ethical questions emerge. Is a man with a prosthetic body part still a biological being? What is the threshold between biological parts and synthetic parts for someone to be considered a human being? There are people without arms and legs, because of injuries or because of genetic diseases, like Torso Man. We could and should re-create the missing parts and continue living as before, using our new parts. Bionic parts must evolve until they feel and perform identically to the original biological parts.

It relates to invisible organs too. The heart, which happens to be a pump, not a soul keeper. People live with artificial hearts. Look at the man walking out of the hospital without a human heart. The kidneys, which are served by external hemodialysis machines. New research is being performed to embed kidney robots into the body. Ethical questions continue: where is the boundary of what we call a ‘human’? Is it the head? Or the brain only? What makes us human to other humans?

We are defined by our genes. Our biological capabilities are on genes. Then we learn and train to build on top of our given foundation. We are different by genes, hence something that is easy for one could be difficult for another. E.g. since childhood sportsmen usually have better metabolism in comparison to those who grow to ‘office plankton’.

There are diseases caused by harmful mutations in genes. Actually any mutation is bad, because of unpredictable results in the first generation with the new mutant [gene]. But some mutations are bad from generation to generation, and are called genetic diseases. It is possible to track many diseases down to the genes. There are Genetic Browsers that allow us to look into the genome down to the DNA chain. Take a look at the CFTR gene: the first snapshot is a high-level view, with chromosome number and position; the second is zoomed to the base, with the ACGT chain visible.

If parents with a genetic disease want to protect their child from that disease, they may want to fix the known gene. Everything else [genetically] will remain naturally biological, but only that one mutant will be fixed to normal. The kid will not have the disease of the ancestors, which is good. A question emerges: is this kid fully biological? How does that genetic engineering impact established social norms?

What if parents are fans of Lance Armstrong and decide to edit more genes, to make their future kid a good sportsman?

Digging down to the DNA level, it is very interesting to figure out what is possible there to improve ourselves, and what is life at all. How to recognize life? How would we recognize life on Mars, if it’s present there?

Here is the definition from Wikipedia: “The definition of life is controversial. The current definition is that organisms maintain homeostasis, are composed of cells, undergo metabolism, can grow, adapt to their environment, respond to stimuli, and reproduce.” The very first sentence resonates with the questions we are asking…

Craig Venter led the team of scientists that extracted the genetic material from the cell (Mycoplasma genitalium), instrumented its genome by inserting the names of 20 scientists and a link to the web site, implanted the edited material back into the cell, and observed the cell reproducing many times. Their result – Mycoplasma laboratorium – reproduced billions of times, passing the encoded info through generations. The cell had ~470 genes.

What is the absolute minimum number of genes, and what are those genes, to create life? Is it 150? Or fewer? And which ones exactly? What are their specializations/functions? It’s an ongoing experiment… Good luck guys! Looking forward to your research success, and to learning what Minimum Viable Life (MVL) is. BTW by doing this experiment, scientists designed new technologies and tools that allow modeling the genes programmatically, and then synthesizing them at the molecular level.

While some are digging into the genome, others are trying to replicate humans (and other creatures) at the macro level. The most successful with humanoid machines is Boston Dynamics.

How far are we from making them indistinguishable from humans? Pretty far, it seems. The weight, the center of gravity, motion, gestures, look & feel are still not there. I bet that humanoids will first be created in the military and porn. The military will need robots to operate plenty of outdated military equipment, and to serve and fight in hazardous environments. It’s only old weaponry that requires manned control, while new weapons are designed to operate unmanned. Porn will evolve to the level that we will have sex with robots. For the military it’s more an economic need. For our leisure it’s a romantic need and personal experience.

The size and shape of robots doing mechanical work is so different. From tunnel drilling monsters to blood vessels…

If we look for the commonality in the mentioned (and several unmentioned) disrupting technologies, we could select 8 of them (extended and reworked 8 directions of Singularity University), which stand out:

As we slightly covered Biology, Medicine and Robotics already, more is to be said about the rest. But before that, a few words about Biotech. We could program new behavior of the biomass, by engineering what the cells must produce, and use those biorobots to clean the landfills around the cities, sewerage, rivers, seas, maybe air. Biorobots also could clean our organisms, inside and outside. Specially engineered micro biorobots could eat the stones on Mars and produce an atmosphere there. Not so fast, but feasible.

Well, more words about other disrupting technologies. Networks and Sensors next. First of all – it’s about networks between human & human, machine & machine, human & machine. The network effect happens within the network, and is known as Metcalfe’s Law. Networks are wired and wireless, synchronous and asynchronous, local and geographically distributed, static and dynamic mesh etc. Very promising are Mesh Networks, which allow avoiding Thing-Cloud aka Client-Server architectures, despite all cloud providers pushing for those. Architecturally (and by common sense) it’s better to establish the mesh locally, with redundancy and specialization of nodes, and relay the data between the mesh and the cloud via some edge device, which could be dynamically selected.

Sensors will be everywhere. Within interiors, on the body, as infrastructure of the streets, in the ambient environment, in the food etc. Our life is improved when we sense/measure and proactively prepare. We are used to weather forecasts, which are very precise for a day or two. It’s because of the huge number of land sensors, air sensors, and satellite imagery. Body sensors are gaining popularity, as wearables for the quantified self. There are recommendations for the lifestyle, based on your body readings. It’s early and primitive today, but it will dramatically improve with more data recorded and analyzed. Modern transportation requires more sensors within/along the roads and streets, and in cars. It’s evolving. Miniaturization shapes them all. Those sensors must be invisible to the eye, and fully integrated into the clothes and machines and environment.
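Metcalfe’s Law says the value of a network grows roughly with the square of the number of connected nodes, because that is how the number of possible pairwise connections grows. A minimal sketch (the function name `pairwise_links` is mine; treating "value" as the count of possible links is the usual simplification, not a measured quantity):

```python
def pairwise_links(n):
    """Number of distinct node-to-node connections in a network of n nodes."""
    return n * (n - 1) // 2


if __name__ == "__main__":
    for n in (2, 10, 100, 1000):
        print(f"{n:>5} nodes -> {pairwise_links(n):>7} possible links")
```

Ten times more nodes gives roughly a hundred times more possible links, which is why a local mesh gets interesting quickly as devices are added.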

3D Printing. The biggest change is related to ownership of intellectual property. The 3D model will be the thing, while its replication at any location, on demand, on any printer will be a commodity function. Many things became digital: books, photos, movies, games. Many things are becoming digital: hard goods, food, organs, genome. It’s a matter of time until we have cheap technology capable of synthesizing at the atomic grid and molecular level. New materials are needed everywhere, especially for human augmentation, for energy storage and for computing.

Nanotech. We learn to engineer at the scale of 10^-9 meter. From non-stick cookware and self restoring paint (for cars), to sunscreen and nanorobots for cleaning our veins, to new computing chips. Nano & Bio are very related, as purification and cleanup processes for industry and environment are being redesigned at nano level. Nano & 3D Printing are related too, as ultimate result will be affordable nanofactory for everyone.

Computing. We’re approaching disruption here. Moore’s Law is still there, but it’s slowing down and the end is visible. Some breakthrough is required. The hegemony of Intel is being challenged by IBM with POWER8 (and obviously the almost ready POWER9) and by ARM (v8 chips). Google is experimenting with POWER and ARM. It’s true, Qualcomm is pushing with ARM-based servers. D-Wave is pioneering Quantum Computing (actually it’s superconductivity computing). There is a good intro in my Quantum Hello World post. IBM recently opened access to its own quantum analog. The bottom line is that we need more computing capacity, it must be elastic, and we want it cheaper.

Artificial Intelligence. AI deserves a separate chapter. Here it is.

I blended my thoughts and my impressions from The Second Machine Age, How to Think About Machines that Think, forthcoming The Inevitable, and various other sources that had impact on me.

The purpose of AI was a machine making decisions (as maximization of a reward function). But being better at making decisions != making better decisions. A machine decides how to translate English to Ukrainian without speaking either language. Those programs (and machines) are super screwdrivers: they don’t want to do anything, we want them to do it, and we put our want into them.

AI is a different intelligence: a human cannot recognize 1 billion humans, even having really seen them all many times. AI is Another Intelligence so far. The shape of thinking machines is not human at all: DeepBlue – chess winner – is a tall black box; Watson – Jeopardy winner – is 2 units of 5 racks of 10 POWER7 servers between noisy refrigerators in nice alien blue light (watch from 2:20); Facebook Faces – programs and machines recognizing billions of human faces – is probably big racks in a data center; Google Images – describing the context of an image – is a big part of a data center (detection of a cat took 16,000 servers several years ago); Space Probes are totally different from both humans and the tall black boxes in the data centers.

BTW if somebody really spots a UFO visiting our planet, don’t expect green men, as organics are poor for space travel, because of the dangerous +200/-200 Celsius temperature range, ultraviolet and radiation, and the time needed for travel (even through a wormhole)… That UFO is most probably a robot. Or intelligence on a non-biological carrier, which means a post-biological species (which is worse for us if so).

Our wet brain operates at 100 Watts, while the copy of the simulation of the same number of cells requires 10^12 Watts. Where on Earth will we get 1 trillion watts just for equivalent of one human intelligence? Even not intelligence, but connectivity of the neurons. Isn’t it ridiculous pseudo architecture? We still did not isolate what we call consciousness, and we don’t know it’s structure to properly model it. Brain scanning is in progress, especially for deeper brain. And this Eureka moment, like we got with DNA, is still to come.

We remain at the center, creating and using machines for mental work, like we created and still use machines for physical work. Humans with new mental tools should perform better than without them. Google is a typical memory machine and memory prosthesis. Watson as a lawyer or a doctor is a reality.

Back from the future: at present we already have intelligent machines – governments and corporations. We created those artificial bodies many years ago, and we just don’t realize they are true intelligent machines. They are integrated into and with society, with law that evolved through precedents and legislation, tailored to different locations and cultures. Culture itself is a natural artificial intelligence. A global biological artificial intelligence has emerged on top of politicians, lawyers, and organizations like the United Nations and hundreds of smaller international ones. They are all candidates for substitution by programs and machines.

An interesting observation is that the most intelligent humans are neither harmful nor rulers of others. Hence we could assume that a really smart AI will not be harmful to humans while it is approximately at our level. But it is uncertain what happens with an accelerated and grown AI later in time. Evolution will shape AI too, continuing from the invisible interfaces with machines we have right now. We could stop clicking, typing, and tapping into machines, and talk to them like we talk between ourselves. Today we have three streams of AI: < 3yo AI, Artificial Smartness, Intelligence as a Service.

We are what we eat, hence they will have to eat us? Hm… Real AI will not reveal itself. And most probably they will leave, like we left our cradle Africa…

There were some concerns that we had slowed down, based on observations and perception of daily facts. But it is also visible that several technologies are booming and disrupting our lives on an almost weekly basis. These are the 8 technologies mentioned earlier in the section It All Together. Those technologies are developing exponentially.

Companies are specializing highly within their niches while performing at global scale. The global economy is changing. A few best providers of a narrow function do it worldwide. E.g. Google is serving search globally, with two others far behind (Baidu and Bing, with artificial restriction of Google in China). Illumina chips are used for gene sequencing (90 percent of DNA data produced). Intel chips are the primary host processors in servers. Nvidia chips are the primary coprocessors, and so on. A few companies fulfill 95+ percent of the needs within some niche. Where this has not happened yet, big disruption is expected soon.

This is pure specialization of work at global scale. It is a shift from a normal distribution to a power-law distribution. Some may say that it is a path to global monopolism, with artificially held high costs. But in fact it is not, as Google search is free. Illumina is promising a full human genome sequenced for under $1,000. And Intel still ships new chips according to Moore’s Law, 2x productivity per $1 every 1.5 years.
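The doubling rule quoted above compounds quickly. A minimal sketch, assuming the essay’s simplified formulation of Moore’s Law (productivity per $1 doubles every 1.5 years); the function and constant names are made up for illustration.

```python
DOUBLING_PERIOD_YEARS = 1.5  # doubling period as stated in the text


def productivity_per_dollar(years: float,
                            doubling_period: float = DOUBLING_PERIOD_YEARS) -> float:
    """Growth factor of productivity per $1 after `years`, relative to today."""
    return 2.0 ** (years / doubling_period)


# Compounded growth over a few horizons (in years).
for years in (1.5, 3, 6, 15):
    factor = productivity_per_dollar(years)
    print(f"after {years} years: {factor:.0f}x productivity per $1")
```

Under this assumption, 15 years means ten doublings, which is roughly a thousandfold gain for the same dollar.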

Global specialization reduces global costs, because the same functions and products are produced more efficiently on the same resources, so it is good for our planet with its limited resources. But here another thing happens: we are not preserving resources, we are using them to create new technologies, which are expensive, unique, and disrupting. The provider of such a new technology (and product, service) is not a monopolist, because of its small scale and capacity at the beginning. Either they scale or others replicate it, and a true leader emerges and makes it globally. New sources of energy are also being found, from Sun and wind, and new nuclear too. We’re creating more wealth.

Scaling globally is dramatically easier and cheaper for digital products and services than for physical/hard or hybrid ones. It is the main motivator for the digitization of everything. Software is eating the world, because it is simply cheaper to deliver sw vs. hw. Everything will become software, except the hardware to run the software and the power plants to power the hardware.

Real life is becoming digital very fast. Why are we taking photos of our meals and rooms, our own faces and legs, beautiful and creepy landscapes, compositions? Why do we check in, express status, react to others’ expressed statuses, comment, troll and even fight digitally? We are also voting, declaring, reporting, learning, curing, buying and consuming, and entertaining digitally too. Sometimes we live digitally more than physically. Notice how people record an event while looking at their small smartphone screen instead of looking at the big stage and experiencing it better. Some motivation drives us to record it on multiple phones, from multiple locations, aspects, angles, and distances, push it into the internet, and share it with others. Then we see it all from those recordings, our own and theirs. Why is it happening? Why are we shifting to digital over natural? Or is digital the new natural, as evolution goes on?

Kit Harington was stopped by a cop for speeding. The cop gave an ultimatum: either the driver pays the fine, or he tells whether Jon Snow is alive in the next season. The driver avoided the speeding ticket by telling the virtual/digital story to the cop. For the cop the digital virtual was more important than the physical biological. Isn’t it a natural shift to a new, better reality?

Many people live in virtual worlds today. Take an American and ask about ISIS. Take a Syrian and ask about ISIS. Take a Ukrainian and ask about Crimea and Donbass. Take a Russian and ask about Crimea and Donbass. Same for Israel and Palestine. People will tell you the opposite about everything. People are already living in virtual worlds, created by digital television and the internet. Digitization of life is here already, and we are there already.

Specialization is observed at all levels. Molecules specialized into water, gases, salts, acids. Bigger molecules specialized into proteins and DNA. Then we have cells: stem cells and their specialization into connective tissue, soft tissue, bone and so on. Next are organs. Then body parts. Specialization is present at each abstraction level. At the level of people, specialization is known as roles and professions. Between businesses and countries it is industries. Between nations it is economics and politics.

It looks like we are part of a bigger machine, which is evolving with acceleration. We are like cells, good and bad, specialized from vision to thinking. Roads and pipes are like transportation systems for other cells and payload. The Internet (copper and fiber) is more like a neural system. Connectivity is a true phenomenon. We are now fully disconnected (and useless) without a smartphone, or without a digital social network in any form. Kevin Kelly once called it the One. The Earth of many people will evolve into an Earth of augmented people and machines; they all specialize and unite into the One.

And since the One, it all looks like just a beginning. I feel another One, and more cells-ones, organizing something more complex and intelligent from themselves. If our cells could specialize and unite into 10 trillion and walk, think, write, why can’t it be possible with bigger cells like the One, at a bigger scale like the Galaxy?

The Man is not the last smart species on Earth. In other words, there will be a day, when the Last [current] Man on Earth goes extinct. What will happen faster: transhuman or true AI, that could replicate and grow? I bet on transhuman. Better for humanity too. For now.

Young Schumacher crashed into Senna badly. Senna already was a living legend by that time.

Schumacher scored 41 victories, like Senna, who was killed at Imola in 1994. Then Schumacher significantly outperformed everybody, winning 5 more titles in a row, and became a legend himself.

10,000 times more productive than Xeons at same power consumption levels. With potential to 50,000x.

Schrödinger’s cat lives. Quantum is not micro anymore, it is macro, it is everything.