Intelligence

What is intelligence? We know it as IQ, but not many know what the “Q” stands for. Q is for quotient: IQ is the Intelligence Quotient. IQ was considered the measure of a person’s intelligence, until other Qs kicked in. There are many of them: MQ, PQ, AQ, BQ, EQ, DQ, HQ, FQ, WQ, SQ… Body intelligence, health intelligence, practical intelligence, moral intelligence, and so on and so forth. I am sure they will run out of letters of the English alphabet while labeling newly discovered or isolated intelligences.

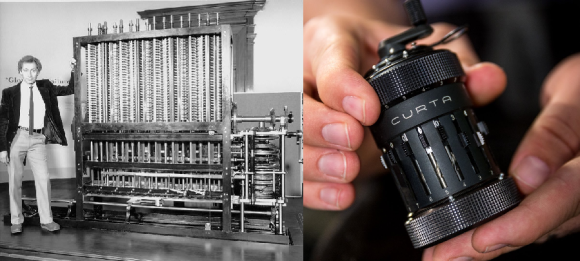

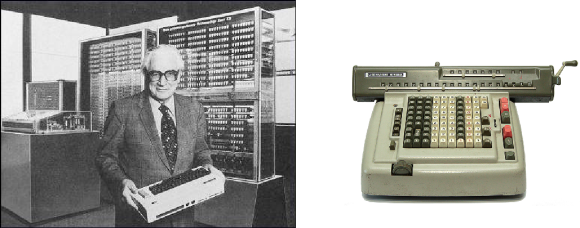

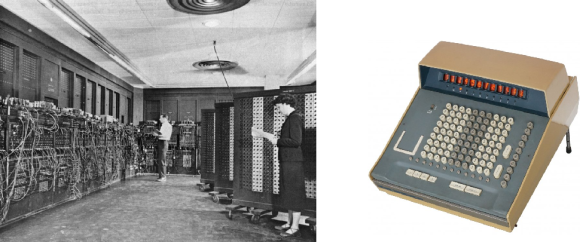

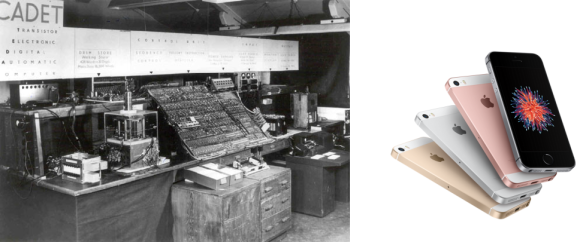

It is possible to identify whether some of those Qs are absent [due to damage or disease]. By augmenting impaired humans with intelligent tools, we could compensate for some Q deficits. The same and similar technologies and tools (especially mental ones) could be pushed to the limit and used by all people, to help us all flourish in the second machine age. It looks to me that recreating narrow intelligence is nothing other than building new tools, not building real [human or stronger] intelligence. It is extending ourselves, not replicating ourselves.

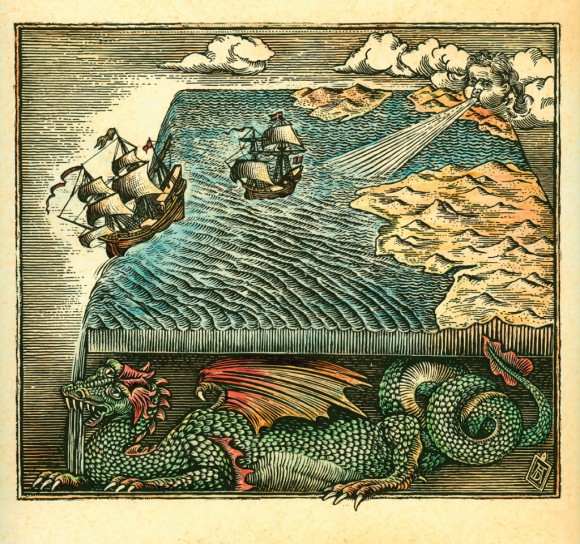

I like this definition of intelligence [by Michio Kaku?]: intelligence is our capability to firmly predict the future. I would rephrase it as our capability to firmly predict the future and the past, because history is usually written by those in power, skewed and warped for their own benefit. Hence the ability to see and know the past and the future, in high resolution, is intelligence. We could compute it. The method doesn’t matter. The further and the more firmly we see the future, the more intelligent we are. The ultimate intelligence (as of today) would be truly seeing, in high resolution, the entire Light Cone of our world.

Humans & Intelligence

It seems that humans are the most intelligent species out there, on our planet Earth. Maybe we are like small ants near the leg of a huge elephant, not seeing the elephant… But it’s OK to look at what we do see: the less intelligent species. It is worth thinking about this in more detail. What is a human? What is a minimum livable human? What makes a human intelligent?

This is strict. There are humans without limbs, because of injuries or diseases (including congenital ones). There are humans without an organic heart, living with an electro-mechanical pump. There are humans without kidneys, on dialysis. There are humans who cannot see or hear. And this leads us to the human brain. As long as the brain is up and running, it makes a human a human.

I don’t know if it’s the brain alone, or the brain plus the spinal cord. But it is clear enough: as long as the brain works as expected, we accept the human as a peer. The converse holds too: people with a damaged brain but a human body are still accepted as humans. But here we are talking about the intelligent human, about human intelligence. So the case of the working brain is what we are interested in.

Human Brain

Human Brain on the Right. More on Wikipedia.

This is still difficult. On the one hand, we know pretty well what our brain is. On the other hand, we don’t know it deeply enough. It is even difficult to explain size vs. intelligence. How could the small brain of a grey parrot provide intelligence on par with the much bigger brain of some chimps? Or how does the smaller human brain produce more intelligence than the 3x bigger elephant brain? This leads us to thinking about form/structure vs. function. Probably the structure matters more for intelligence than the size?

A very interesting hypothesis about the wiring pattern is called cliques & cavities. The function could possibly be encoded in directed graphs of neurons. The connections could be unidirectional or bidirectional. The cliques could compute something relevant [locally], and interoperate with other cliques at a higher level. They could encode or process something like 11-dimensional “things”. Who wants to check whether Hinton’s recent capsules are similar to those cliques?

The #1 problem is the absence of brain scanners that could scan the brain deeply and densely enough without damaging it. Brain scans [electricity, chemistry] at all depth levels, with resolution down to the millisecond, would help a lot. Resolution down to the nanosecond would be even better… But we don’t have such scanners yet. Some scanning technologies damage the brain. Others are not high-resolution enough. Maybe Paul Allen’s Brain Institute could invent something soon.

Enlightenment

20 years ago something bright was discovered in the rat brain: light produced by the brain itself. Since then, there has been no confirmation that the human brain produces light too. But there is confirmation that our axons could transmit light. So we do have fiber optics capabilities in our brains. It was also measured that the human body emits biophotons. Based on the detection of light in the mammalian brain, and on the fiber optics in our brain, we could propose the hypothesis that [with high probability] our brain also uses biophotons. The biophotonic activity in the human brain is still to be measured.

Even if the light is weak and fires once per minute, the overall simultaneous enlightenment of the brain is rich for information exchange. It would be a huge jump in data bandwidth in comparison to electrical signals. There is a curious hypothesis that the specifics of light transmission are what significantly distinguish the human brain from other mammalian brains. Especially the red shift.

The man without a brain raised many questions. What is the minimum viable brain? Do our brains transmit only electricity, or is a big part of the data exchange carried by light?

When could we confirm the light in the brain? Not soon enough. We banned the experiments on cats that studied mammalian vision & perception. Experiments on the human brain are even more fragile, ethically. I am not expecting a breakthrough any time soon…

What we have today is modeling of the cortex layers as neurons with electrical signals between them, bigger or smaller depending on the strength of the connections. Functionally, it is modeling of perception. It may look as if some thinking is modeled too, especially in playing games. But wait. In the game of Go the entire board is visible. In StarCraft the board is not fully visible, and humans recently beat the machines. More difficult than Go is poker, and the poker winner is Libratus. Libratus is not based on neural nets; it works on counterfactual regret minimization (CFR).
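To give a feeling for what “regret minimization” means, here is a minimal sketch of regret matching, the update rule at the core of CFR. It is not Libratus and not full CFR: it plays a one-shot game (rock-paper-scissors) against a fixed opponent, and the names `PAYOFF`, `strategy_from_regrets` and `train` are made up for this illustration. The learner keeps a running total of how much it regrets not having played each action, and plays actions in proportion to their positive regret.

```python
# Regret matching on rock-paper-scissors against a fixed opponent strategy.
# Illustrative only: a one-shot game with exact expected payoffs, not full CFR.

ACTIONS = ["rock", "paper", "scissors"]

# PAYOFF[a][b] is the learner's payoff when it plays a and the opponent plays b.
PAYOFF = [
    [0, -1, 1],   # rock loses to paper, beats scissors
    [1, 0, -1],   # paper beats rock, loses to scissors
    [-1, 1, 0],   # scissors loses to rock, beats paper
]


def strategy_from_regrets(regrets):
    """Play each action in proportion to its positive cumulative regret."""
    positive = [max(r, 0.0) for r in regrets]
    total = sum(positive)
    if total == 0:
        # No positive regret yet: fall back to the uniform strategy.
        return [1.0 / len(regrets)] * len(regrets)
    return [p / total for p in positive]


def train(opponent, iterations=1000):
    """Return the learner's average strategy after the given number of iterations."""
    regrets = [0.0] * len(ACTIONS)
    strategy_sum = [0.0] * len(ACTIONS)
    for _ in range(iterations):
        strategy = strategy_from_regrets(regrets)
        strategy_sum = [s + p for s, p in zip(strategy_sum, strategy)]
        # Expected payoff of each pure action against the opponent's mix.
        values = [
            sum(PAYOFF[a][b] * opponent[b] for b in range(len(ACTIONS)))
            for a in range(len(ACTIONS))
        ]
        expected = sum(p * v for p, v in zip(strategy, values))
        # Regret: how much better each action would have done than the current mix.
        regrets = [r + v - expected for r, v in zip(regrets, values)]
    return [s / iterations for s in strategy_sum]


if __name__ == "__main__":
    # Hypothetical opponent that plays rock a bit too often.
    average = train([0.4, 0.3, 0.3])
    print(dict(zip(ACTIONS, average)))
```

Against an opponent that overplays rock, the average strategy drifts towards paper. Full CFR applies the same kind of update at every decision point of a game tree with hidden information, which is a much bigger job than this toy.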

We lack experiments, we lack scanning technologies. We have advanced only in the simulation of perception, with deep neural nets. Topologies are immature, reusability is low. And those neural nets transmit only an abstraction of electricity, not light.

Learning from Data

Machine Learning is an algorithmic approach in which a program is capable of learning from data. Machine Learning allowed us to solve the same old problems better. Most popular today is Deep Learning, a subset of Machine Learning. To be specific, deep learning brought a breakthrough in computer vision and speech processing. Today, routine tasks such as image classification and speech transcription are cheaper and more reliable when done by machines than by humans.

The most popular deep learning guys are the so-called Connectionists. Let’s be honest: there is big hype around deep learning. Many people don’t even know that there are several other approaches to machine learning besides deep neural nets. Check out the good intro and comparison of machine learning approaches by Pedro Domingos (author of The Master Algorithm). Listen to the fresh stuff from the Symbolists Gary Marcus (former Uber) and Francesca Rossi (IBM). Hear fresh Evolutionist stuff from Ilya Sutskever (OpenAI, soon Tesla?). Hear from the Analogizers: Maya Gupta (Google). Check out fresh stuff from the Bayesians: Ben Vigoda (Gamalon) on Idea Learning instead of Deep Learning, Ruslan Salakhutdinov (Apple), Eric Horvitz (Microsoft). Book the date to listen to Zoubin Ghahramani (Uber).

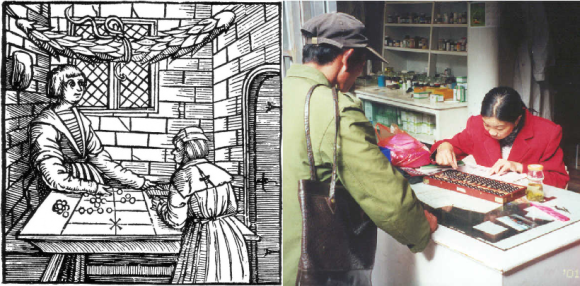

Each machine learning approach gives us a better tool. It is the dawn of the second machine age, with mental tools. It is a very popular and commercialized niche nowadays. Ironically, all the shit data produced by people converts from useless into useful. All those pictures of cats, food and selfies have become training data. Even poor corporate PowerPoints are becoming training data for visual reasoning. And this aspect of the data metamorphosis is joyful. Obviously this kind of intelligence eats data, and people produce the data to feed it. This human behavior is nothing other than working for the machines, in a way that feels like fun. Next time you snap your food or render a creepy pie chart, think that most probably you did it for the machines.

Maybe a combination of those approaches could give a breakthrough… This is known as the search for the holy grail of machine learning: the master algorithm. To combine or not to combine is a grey area, while the need for more data is clear. The Internet of Things could help, by cloning the good old world into its digital representation. By feeding that amount [and high resolution] of data to machines, we could hope that they would learn well from it. But there is no IoT yet: there is the Internet and there are no Things. IPv6, whose address space is big enough to give every thing its own address, is still not rolled out everywhere. Furthermore, learning from data will be restricted by a relative shortage of data access. The network bandwidth growth rate is slower than the data growth rate, hence a smaller and smaller share of the data can make it through the pipe… Data Gravity will emerge. To access the data, you will have to go to the data, physically, with your tools and yourselves. Data access will be a bigger and bigger issue in the years to come. Any better pathway towards creating Intelligence?

Building Complexity

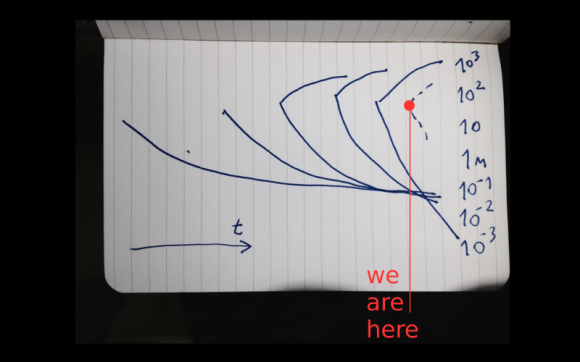

How did intelligence emerge on this planet? It was built gradually, during a very long evolution. The diversity and complexity increased over time. We can observe and analyze complex systems emerging over scale and self-organizing over time. Intelligence is a complex system [I think so]. And a complex system could do more than only perceive. How? By building and evolving those capabilities. It is very similar to the creation of a new technology. Everything is possible in this world, just create the technology for it. Technology could be biological, could be digital, whatever. It gives the capability to do something that intelligence wants to do. Hence intelligence evolves towards the creation of such capabilities. And this repeats and repeats. As a result, intelligence grows bigger and bigger.

It is worth looking at the place of what we call Artificial Intelligence among other Complex Systems. What I call Intelligence in this post is what Complex Adaptive Systems do: emergence over scale and self-organization over time. Intelligence could be observed at different levels of abstraction. How did 10 trillion molecules emerge and organize to move all together 1 meter above the ground? How do human brain modules or neurons comprehend and memorize? How did humanity launch a probe from the Pale Blue Dot to outside the Solar System?

Complexity is not as scary as it looks. There could be no master plan at all, though there could be a master config with simple rules. Like the speed of light is this, the gravitational constant is that, minimal energy is this, minimal temperature is that, and so forth. This is enough to build some enormous and beautiful complexity. Let’s look at primitive one-dimensional rules, and the “universes” they build.

Wolfram Rule 30 will be first. In all of Wolfram’s elementary cellular automata, an infinite one-dimensional array of cellular automaton cells with only two states is considered, with each cell in some initial state. At discrete time intervals, every cell spontaneously changes state based on its current state and the state of its two neighbors. For Rule 30, the rule set which governs the next state of the automaton is:

| Current pattern | 111 | 110 | 101 | 100 | 011 | 010 | 001 | 000 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| New state for center cell | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 0 |

A very similar pattern can be observed in nature, on the shell of a mollusk.
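The Rule 30 table is small enough to run by hand, or in a few lines of Python. The sketch below is my own illustration, with one loud assumption: instead of an infinite array it uses a finite row that wraps around at the edges. The function names (`make_rule`, `step`, `run`, `render`) are invented for this example.

```python
# Elementary cellular automaton on a finite ring of cells.
# Assumption: the row wraps around at the edges instead of being infinite.

def make_rule(number):
    """Build the lookup table (left, center, right) -> new center state."""
    # Bit k of the rule number is the output for the neighborhood
    # whose three cells, read as a binary number, equal k.
    return {
        ((k >> 2) & 1, (k >> 1) & 1, k & 1): (number >> k) & 1
        for k in range(8)
    }


def step(cells, rule):
    """Compute the next row; all cells are updated from the same old row."""
    n = len(cells)
    return [
        rule[(cells[(i - 1) % n], cells[i], cells[(i + 1) % n])]
        for i in range(n)
    ]


def run(number, width=31, steps=15):
    """Start from a single live cell in the middle and return all rows."""
    rule = make_rule(number)
    cells = [0] * width
    cells[width // 2] = 1
    rows = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        rows.append(cells)
    return rows


def render(rows):
    """Draw live cells as '#' and dead cells as '.'."""
    return "\n".join("".join("#" if c else "." for c in row) for row in rows)


if __name__ == "__main__":
    print(render(run(30)))
```

Printing more rows (and a wider row) shows the irregular triangles that resemble the mollusk shell pattern.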

Wolfram Rule 110 is an elementary cellular automaton with interesting behavior on the boundary between stability and chaos. Its rule set is:

| Current pattern | 111 | 110 | 101 | 100 | 011 | 010 | 001 | 000 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| New state for center cell | 0 | 1 | 1 | 0 | 1 | 1 | 1 | 0 |

Rule 110 is known to be Turing complete. This implies that, in principle, any calculation or computer program can be simulated using this automaton. That puts it in the same class of computational power as lambda calculus. Hey Python coders, ever coded a lambda function? The universality proof works by emulating cyclic tag systems.
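Where does the number 110 come from? Read the eight new states from the table left to right as one binary number. A small sketch, again with names I made up (`rule_number`, `step`, `evolve`) and with the assumption of a finite row that wraps around at the edges:

```python
# From the rule table to the rule number, and a few steps of Rule 110.
# Assumption: a finite row that wraps around, not an infinite array.

PATTERNS = ["111", "110", "101", "100", "011", "010", "001", "000"]
RULE_110_OUTPUTS = [0, 1, 1, 0, 1, 1, 1, 0]


def rule_number(outputs):
    """Read the outputs, ordered from pattern 111 down to 000, as binary."""
    return int("".join(str(bit) for bit in outputs), 2)


def step(cells, table):
    """Compute the next row from the pattern -> new state lookup table."""
    n = len(cells)
    return [
        table[f"{cells[(i - 1) % n]}{cells[i]}{cells[(i + 1) % n]}"]
        for i in range(n)
    ]


def evolve(cells, outputs, steps):
    """Return the starting row followed by the given number of next rows."""
    table = dict(zip(PATTERNS, outputs))
    rows = [list(cells)]
    for _ in range(steps):
        rows.append(step(rows[-1], table))
    return rows


if __name__ == "__main__":
    print(rule_number(RULE_110_OUTPUTS))
    # Start from a single live cell at the right edge.
    for row in evolve([0] * 31 + [1], RULE_110_OUTPUTS, 16):
        print("".join("#" if c else "." for c in row))
```

Starting from a single live cell on the right, the pattern grows to the left, mixing regular stripes with irregular triangles.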

Wolfram Rule 110 is similar to Conway’s Game of Life. Also known simply as Life, it is a cellular automaton and a zero-player game, meaning that its evolution is determined by its initial state, requiring no further input. One interacts with the Game of Life by creating an initial configuration and observing how it evolves, or, for advanced “players”, by creating patterns with particular properties.
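Life fits in a dozen lines too. The rules are not spelled out in this post, so the sketch below assumes the standard ones: a dead cell with exactly three live neighbors is born, a live cell with two or three live neighbors survives, everything else dies. The board is unbounded and stored as a set of live `(x, y)` cells; `life_step` and `run` are names invented for this example.

```python
# Conway's Game of Life on an unbounded board, stored as a set of live cells.
# Assumes the standard rules: birth on 3 neighbors, survival on 2 or 3.

from collections import Counter


def life_step(live):
    """Return the next generation for a set of live (x, y) cells."""
    # Count, for every cell, how many live neighbors it has.
    counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    return {
        cell
        for cell, n in counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


def run(live, generations):
    """Apply life_step the given number of times."""
    for _ in range(generations):
        live = life_step(live)
    return live


if __name__ == "__main__":
    glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}
    # After 4 generations the glider has the same shape, moved one cell diagonally.
    print(sorted(run(glider, 4)))
```

The initial configuration is the only input. A glider walking across the board is the simplest example of a pattern with a particular property.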

Complexity could be built with simple rules from simple parts. The hidden order will reveal itself at some moment. Actually, Hidden Order is a work by John Holland, the Evolutionist(?). We need more diverse abstractions that do/have aggregation, tagging, nonlinearity, flows of resources, diversity, internal models, building blocks, and that could become that true Intelligence. Maybe we have already built some blocks, e.g. neural nets for perception. Maybe we need to combine the growing stuff with a quantum approach: probabilities, coherence and entanglement? Maybe energy is worth more attention? Learn how to grow complexity. Build complexity. Over scale & time, Intelligence may emerge.

PS.

This was my guest lecture for the 1st year students of Lviv Polytechnic National University, Computer Science Institute, AI Systems Faculty. There were many of them, all young, open to thinking and doing.